The last article in this series focused on containerization and virtualization and how they differ from one another. We discussed how Docker uses containers to give developers and SysAdmins control over how applications are developed and configured, and how it lets software run in separate, isolated containers. In this article, we begin our discussion of the Docker platform itself: the Docker architecture and the various components of Docker.

The following topics will be the highlights of this post:

- What is Docker?

- Features of Docker

- What are the benefits of Docker?

- Docker Architecture

- Docker Workflow

- Docker Engine

- Docker Image

- Docker Container

- Docker Registry

- When should we use Docker?

- Standardized Environments

- Disaster Recovery

- Faster and Consistent Delivery of Applications

- Code Management

What is Docker?

As we know, containers are lightweight components that package an application along with its dependencies, libraries, etc., so that it can be deployed as a unit. Docker is one such container platform: a software platform that uses OS-level virtualization to create, deploy, and run applications.

Although we can create containers without Docker, Docker simplifies and accelerates application deployment and the overall workflow. Using Docker, we can choose a product-specific deployment environment with its own set of tools and application stacks. Applications built with Docker are simpler and safer to build, deploy, and manage.

Docker is essentially a toolkit that enables developers to build, deploy, run, update, and stop containers using simple commands and the Docker API. Unlike virtual machines (VMs), which rely on a hypervisor, Docker achieves virtualization at the operating-system level using containers.

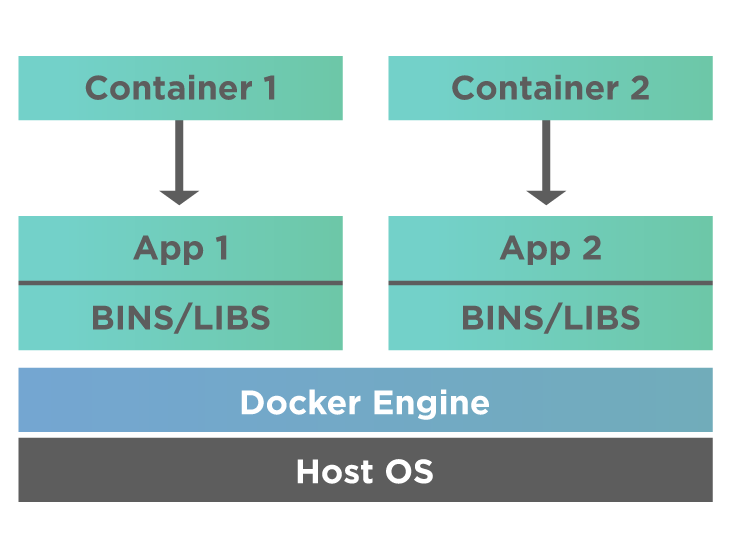

The diagram above shows a typical Docker system on a host OS.

As shown in the diagram, multiple applications run on the host machine, each in a separate container. The container holding each application also has its own set of dependencies and libraries, so each application is independent of the others and they do not interfere with one another. We can therefore prepare a container with the required applications installed and distribute it; anyone can then run it to replicate the same environment for verification and other purposes.

Docker containers run on top of the host operating system in isolated environments. Unlike VMs, which run a full guest OS on virtualized hardware provided by a hypervisor, containers share the host's kernel and do not virtualize hardware. Linux containers can still run on a Windows host with the help of a lightweight Linux VM (for example, using Hyper-V). All the apps (APP1, APP2, etc.) run as Docker containers. As part of its Libs/Bins, each container typically includes a minimal Linux base (userland files, not a separate kernel) to manage the dependencies within the container.

Next comes the Docker Engine. The Docker Engine runs on the host OS and creates and runs the containers. It exposes an API that applications use to interact with the Docker daemon, and because this API is served over HTTP, we can access Docker Engine using any HTTP client.
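Because the Engine's API is plain HTTP, a few lines of standard-library Python are enough to query it. The sketch below is a minimal example, assuming a Linux host where the daemon listens on its default Unix socket `/var/run/docker.sock` and the current user is allowed to use it. `UnixHTTPConnection`, `docker_get`, and `list_containers` are illustrative names, not part of any Docker SDK; `/_ping` and `/containers/json` are Engine API endpoints.

```python
import http.client
import json
import socket
from typing import Tuple

# Default path of the daemon's socket on Linux (assumption: a standard install).
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills in the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path: str, socket_path: str = DOCKER_SOCKET) -> Tuple[int, bytes]:
    """Send GET <path> to the Docker Engine API and return (status, body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.read()
    finally:
        conn.close()


def list_containers(socket_path: str = DOCKER_SOCKET) -> list:
    """Return the running containers reported by GET /containers/json."""
    status, body = docker_get("/containers/json", socket_path)
    if status != 200:
        raise RuntimeError(f"Docker API returned HTTP {status}")
    return json.loads(body)
```

On a healthy daemon, `docker_get("/_ping")` should return status 200 with the body `b"OK"`, and `list_containers()` returns the running containers as a list of JSON objects.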

We will learn more about Docker Engine later in this article.

Features of Docker

Some of the essential features of the Docker platform are listed below:

- Containers have a much smaller OS footprint than virtual machines, which reduces the size of what we develop and ship.

- Because containers take care of preparing the systems, teams such as Dev, QA, and Operations can focus on their own work instead. Using containers, we can work seamlessly across applications.

- Containerized applications can be deployed anywhere: on physical machines, on virtual machines, or in the cloud.

- Docker containers are lightweight and are easily scalable. Their maintenance cost is also low.

What are the benefits of Docker?

Below are some of the benefits of Docker.

- Generally, the QA team has to set up an entire development environment for an application, including its dependent software and binaries, to test the code. With the Docker platform, the QA team does not need to do all this work. They only have to run the container, and everything is set up. This saves them a lot of time and energy.

- With applications and their dependencies packed in a container, the working environment is consistent across all the machines and environments involved in the process.

- We can effortlessly increase the number of systems involved and deploy the application code on them, so scaling is easy with the Docker platform.

- Using a tool like Docker, the development, deployment, and distribution of applications are faster and easier.

- The Docker platform saves time, is simple to use, and integrates easily into existing environments.

- Using the Docker platform, we can run an application in different locations, whether physical, virtual, or in the cloud (private or public). Thus, Docker is flexible and portable.

- With Docker, we have a more innovative solution for application development, deployment, and distribution.

Now let us move on to discuss Docker architecture and the Docker components workflow.

Docker Architecture

The Docker architecture contains the following components:

- Docker client: the client triggers interactions with the Docker Host.

- Docker Host: the Docker Host runs the Docker daemon and holds the images and containers it manages.

- Docker daemon: a component that runs inside the Docker Host and handles the images and containers.

- Docker registry: the Docker registry stores Docker images. Docker Hub is one such registry, but you can run your own too.

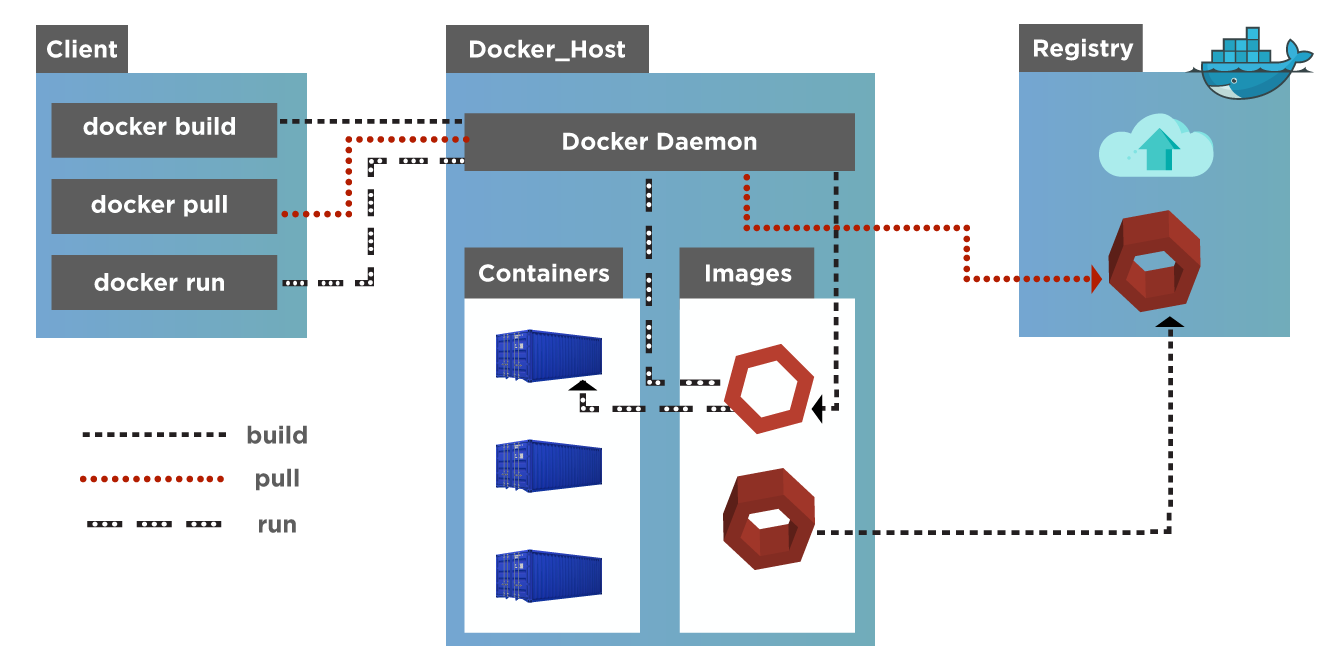

The diagram above shows a basic Docker architecture, with the components described above connected to each other.

- We have a Docker Host that runs Docker daemon.

- We also have a Docker registry that stores Docker images.

- Then we also have a client communicating with Docker Host.

The workflow of this architecture is described below, followed by a more detailed look at each of its components.

Docker Workflow

The components in the architecture diagram communicate with each other in the following steps:

1. The CLI (client) issues a "build" command to the Docker Host. The Docker daemon running on the Docker Host builds an image based on the client's inputs. The image can then be saved (pushed) to a Docker registry, which can be either a local registry or Docker Hub.

2. When an image is needed, the Docker daemon either uses one it has already built or pulls an existing image from the Docker registry.

3. The client issues a "run" command.

4. The daemon creates an instance of the Docker image, a container, which then executes.
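The same workflow can be driven from the Docker CLI. The sketch below only assembles the commands (`docker build`, `docker push`, `docker pull`, `docker run`), so they can be inspected without a daemon; `run_workflow` then executes them and assumes the `docker` CLI is installed and already logged in to the target registry. The image name `registry.example.com/team/myapp:1.0` used below and the helper names are hypothetical.

```python
import shutil
import subprocess
from typing import List


def workflow_commands(image: str, context: str = ".") -> List[List[str]]:
    """Return the docker CLI invocations for the build -> push -> pull -> run workflow."""
    return [
        # The daemon builds an image from the build context and tags it.
        ["docker", "build", "-t", image, context],
        # The image is uploaded to the registry named in the image reference.
        ["docker", "push", image],
        # Any host can fetch the image back from that registry.
        ["docker", "pull", image],
        # The daemon creates a container from the image and runs it; --rm deletes it on exit.
        ["docker", "run", "--rm", image],
    ]


def run_workflow(image: str, context: str = ".") -> None:
    """Execute the workflow step by step, stopping at the first failing command."""
    if shutil.which("docker") is None:
        raise RuntimeError("the docker CLI is not on PATH")
    for cmd in workflow_commands(image, context):
        subprocess.run(cmd, check=True)
```

For example, `run_workflow("registry.example.com/team/myapp:1.0")` run from a directory containing a Dockerfile would build, publish, fetch, and start the application in that order.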

The above steps describe a simple workflow of a typical Docker ecosystem. Let us discuss each of the Docker components in more detail.

Docker Engine

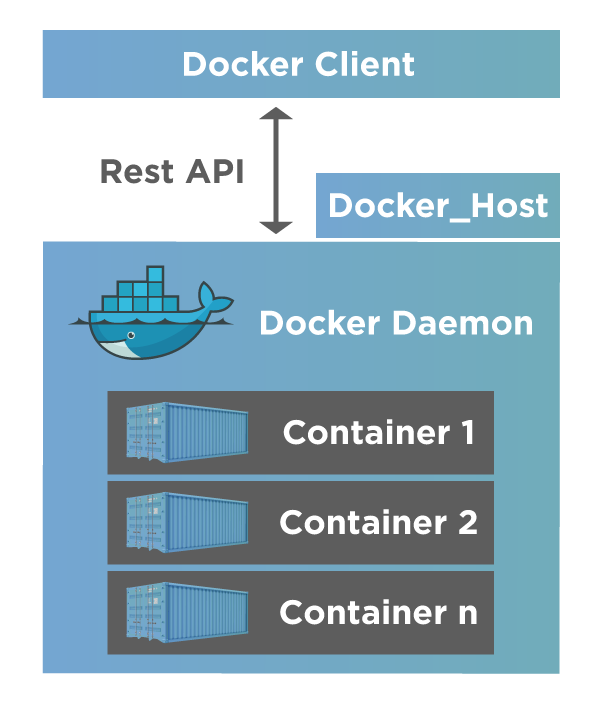

Docker Engine is the heart of the Docker system. It is open source and provides the containerization in Docker. Docker Engine is installed on the host and uses a client-server architecture made up of the following parts:

- It has a daemon process that acts as a server. A Docker daemon is a background process. The daemon process is responsible for managing images, containers, storage volumes, and networks.

- A Client or a Command Line Interface (CLI).

- A REST API that the CLI client uses to communicate with the Docker daemon and instruct it.

The diagram above shows the Docker Engine on a Linux operating system.

- The Docker client is accessed from a terminal.

- The host system runs the Docker daemon.

- The Docker Host can also reside on a different machine than the client.

- Images are built and containers are run by passing commands from the client to the daemon through the REST API.
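The Docker CLI decides which daemon to contact from the `DOCKER_HOST` environment variable and falls back to the local Unix socket when it is unset; this is what lets the client and the Docker Host live on different machines. The function below is a simplified sketch of that lookup, not the CLI's actual code: `resolve_daemon` is a hypothetical name, and only the `unix://`, `tcp://`, and `ssh://` forms are handled.

```python
import os
from typing import Mapping, Optional, Tuple
from urllib.parse import urlparse

# Default daemon address on Linux when DOCKER_HOST is not set.
DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock"


def resolve_daemon(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (transport, address) of the daemon a client should talk to."""
    env = os.environ if env is None else env
    url = urlparse(env.get("DOCKER_HOST") or DEFAULT_DOCKER_HOST)
    if url.scheme == "unix":
        return "unix", url.path  # daemon on the same machine, reached via a socket file
    if url.scheme in ("tcp", "ssh"):
        return url.scheme, url.netloc  # daemon on another machine
    raise ValueError(f"unsupported DOCKER_HOST scheme: {url.scheme!r}")
```

With `DOCKER_HOST=tcp://192.168.1.10:2376`, for instance, the client would send its REST calls to the daemon at `192.168.1.10:2376` instead of the local socket.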

Note: On older Windows and macOS systems, the Docker environment was commonly set up with Docker Toolbox, an installer for quickly installing and configuring Docker. Toolbox installs the Docker components, including the Docker client, Docker Machine, Docker Compose (for Mac), VirtualBox (which hosts the Linux VM running the Docker daemon), Kitematic, etc. Docker Toolbox has since been replaced by Docker Desktop.

As we proceed with the Docker tutorial series, we will discuss these topics in detail in upcoming articles.

Docker Image

A Docker image is what containers are built from. We can view a Docker image as the "source code" of a container. Images come with pre-installed software, they are portable, and we can often use existing images instead of building brand new ones. All this makes deployment more straightforward and faster.

In simpler terms, Docker images are the building blocks of Docker containers and serve as their templates.

We will learn more about Docker images in our subsequent articles.

Docker Container

Docker achieves containerization through containers. If we view a Docker image as a template, then a Docker container is a running instance of that image. So we can create multiple containers from one image.

Containers are the organizational units in Docker. When we say we are running or executing a Docker container, we are running an instance of an image. Containers take their name from an analogy with cargo containers.

Just as with cargo containers, we can pack software into a container and then move it, ship it, modify it, manage it, or destroy it.

Docker Containers hold the entire package, including application code, dependencies, binaries, etc., to efficiently run an application without any interference.
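The template/instance relationship between images and containers can be illustrated with a small toy model. It is only an analogy under simplifying assumptions: the `Image` and `Container` classes and `run_container` are hypothetical, and real Docker implements this at the filesystem level rather than with Python objects. The point is that every container starts from the same read-only image, while changes made inside one container stay private to it.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Image:
    """Read-only template: a name plus the files baked in when it was built."""
    name: str
    files: Tuple[Tuple[str, str], ...]  # (path, content) pairs; a tuple keeps them immutable


@dataclass
class Container:
    """One instance of an image, with its own private, writable changes."""
    image: Image
    name: str
    changes: Dict[str, str] = field(default_factory=dict)

    def write(self, path: str, content: str) -> None:
        # Writes land in this container only; the shared image is never modified.
        self.changes[path] = content

    def read(self, path: str) -> Optional[str]:
        # The container's own changes take precedence over the image's files.
        if path in self.changes:
            return self.changes[path]
        return dict(self.image.files).get(path)


def run_container(image: Image, name: str) -> Container:
    """Hypothetical stand-in for `docker run`: create a fresh container from an image."""
    return Container(image=image, name=name)
```

For example, two containers created from the same `Image("myapp:1.0", ...)` both see its files, but a `write` in one of them is invisible to the other.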

We will come up with a separate topic on Docker containers in our Docker series that will discuss Docker containers in detail.

Docker Registry

A Docker registry is a repository used to store Docker images. Registries can be public or private. The best-known public registry is Docker Hub, which is similar to online code repositories like GitHub and is accessible to millions of users. Docker Hub also lets us store images privately, and organizations can run their own private registries.
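Which registry an image comes from is encoded in the image's name. The parser below is a simplified sketch of the naming convention the Docker CLI applies, assuming its usual defaults: a name without a registry host refers to Docker Hub (`docker.io`), official images sit under the `library/` namespace, and a missing tag means `latest`. `parse_image_ref` and `ImageRef` are hypothetical names, and digest references (`@sha256:...`) are deliberately rejected rather than parsed.

```python
from typing import NamedTuple

DEFAULT_REGISTRY = "docker.io"  # Docker Hub


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image name such as 'registry.example.com/team/app:2.1' into its parts."""
    if "@" in ref:
        raise ValueError("digest references are not handled in this sketch")
    first, sep, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, ref
    # A tag is a ':' suffix in the last path component; without one, 'latest' is implied.
    repo, tag = remainder, "latest"
    colon = remainder.rfind(":")
    if colon > remainder.rfind("/"):
        repo, tag = remainder[:colon], remainder[colon + 1:]
    # Official Docker Hub images live under the implicit 'library/' namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repo:
        repo = "library/" + repo
    return ImageRef(registry, repo, tag)
```

So `ubuntu` is fetched from Docker Hub as `library/ubuntu:latest`, while `localhost:5000/team/app` points at a private registry running on the local machine.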

When should we use Docker?

Since its inception, Docker has proved to be an excellent tool for developers and system administrators alike. We can use it in multiple stages of the DevOps cycle, and it enables rapid, efficient deployment of applications.

Using Docker, we can package an application with all its dependencies into a Docker container and run it in multiple environments. Docker containers provide isolated environments for applications, so multiple containers can run simultaneously on the same host without interfering with each other.

Let us now discuss some places where we can use Docker.

Standardized Environments

Docker is an excellent platform for continuous integration and helps to make the development environment repeatable. This repeatable development environment ensures that every team member works in a standard environment, and each team member is aware of changes and updates at every stage of development.
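A repeatable environment depends on every team member pulling exactly the same images. One way to support that, sketched below under the assumption that the team keeps its image names in one list, is a check that flags references which are untagged or use `latest`, since those can point to a different image after the next push. Version tags can also be re-pointed, so pinning by digest is the strictest option. `unpinned_images` is a hypothetical helper, not a Docker feature.

```python
from typing import Iterable, List


def unpinned_images(refs: Iterable[str]) -> List[str]:
    """Return image references that may resolve to different images over time."""
    flagged = []
    for ref in refs:
        if "@sha256:" in ref:
            continue  # pinned by content digest: always the exact same image
        last = ref.rsplit("/", 1)[-1]  # the tag lives in the last path component
        tag = last.split(":", 1)[1] if ":" in last else "latest"
        if tag == "latest":
            # Untagged or ':latest' changes whenever a new image is pushed under that tag.
            flagged.append(ref)
    return flagged
```

Running such a check in continuous integration would catch an unpinned base image before it lets teammates' environments drift apart.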

Disaster Recovery

Software development is exposed to risks from unexpected failures and disasters, which can halt the development cycle and cause losses. Docker mitigates this risk: if something goes wrong, we can quickly recreate the same environment from its images on a new machine or platform. We can also roll back to an earlier version or revision of an image if needed. This makes Docker a good fit for development environments where failures are likely.
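Rolling back works because each released version remains available as a tagged image. The sketch below assumes a hypothetical deployment history of image tags (ordered oldest to newest, with the last entry currently live) and a single container named `web`; it builds the CLI calls that remove the current container and start one from the previous tag. `docker rm -f` and `docker run -d --name` are standard CLI options, while the history list and `rollback_commands` are illustrative.

```python
from typing import List, Sequence


def rollback_commands(history: Sequence[str], name: str) -> List[List[str]]:
    """Build the docker CLI calls that replace the current container with the previous image."""
    if len(history) < 2:
        raise ValueError("no earlier image version to roll back to")
    previous = history[-2]  # history is ordered oldest -> newest; the last entry is live
    return [
        ["docker", "rm", "-f", name],  # stop and remove the faulty container
        ["docker", "run", "-d", "--name", name, previous],  # start the earlier version in the background
    ]
```

Each returned list can be passed to `subprocess.run(..., check=True)` on a host with the Docker CLI installed.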

Faster and Consistent Delivery of Applications

Docker is a portable platform and helps in faster deployment: ready software, packaged as an image, can simply be pushed to the production environment. It is also easier for developers to complete all the software development stages on the Docker platform.

Code Management

Docker provides a consistent environment for code development as well as code deployment. It also helps us standardize the development environment so that every team member has access to the same setup. All of these factors result in better code management, which in turn makes the system easier to work with and to scale.

Key Takeaways

In a nutshell, Docker is a popular platform for continuous integration and development.

- Docker helps us mitigate the risks in software development by allowing us to restore images or retrieve previous versions or features.

- The Docker architecture consists of the Docker client (CLI), the Docker Host, and the Docker registry.

- The Docker Host contains a Docker daemon process that runs in the background. The Docker registry stores Docker images, which the Docker Host accesses.

- We use Docker in many applications where we require a standardized environment, efficient code management, quick recovery from errors, and faster, more consistent delivery.

In our subsequent tutorials, we will go into detail about each of these Docker topics.