The term containerization refers to the use of containers to deploy applications. Containerization is not a new concept, but it has become popular as an efficient way to deploy applications, most visibly through Docker. Containers and Docker are widely adopted by companies such as Salesforce, Facebook, Google, and Netflix to make large teams more productive and improve resource utilization. Let's understand the concept of containers, their evolution, and how they have become an integral part of software development by covering the following topics:

- Life before containers.

- What is Virtualization?

- Advantages and Disadvantages of Virtualization/VMs.

- What is Containerization?

- What is a Container?

- What is LXC - Linux Container Project?

- When should we choose LXCs or Docker Containerization?

- When should we use Containerization?

- Containerization vs. Virtualization.

Life before containers

Before the era of containers, deploying an application was mostly a matter of never-ending installation and configuration. Every time we came up with a new application, we had to go through the same three steps to build and run it.

- Make a machine or virtual machine (VM) ready: First and foremost, we would prepare the machine or VM by installing the operating system (OS), bringing it up to date with the latest patches, installing all the dependencies that the application requires, and so on.

- Install the application code and configure it: Once we get the machine ready, we would install the application code on it and configure it so that the application code can run on this machine.

- Maintain the machine: Once the application was running, we had to keep maintaining the machine to ensure that it had the latest installations, patches, security updates, etc.

Now consider an e-commerce application like Amazon running on this traditional approach. Certain e-commerce functions such as product listing, selection, add-to-cart, and account management execute continuously and in huge volume. For the application to function properly around the clock, each of these functions has to be backed by a large number of servers.

To maintain such a system using the approach mentioned above, we would frequently run into some severe problems like:

- Server updates were missed: With hundreds of servers to maintain, we are bound to miss some servers while updating or patching. Also, these servers may not all have the same configuration, so it is not easy to update or patch all of them at once. Deployment is also difficult in such a system.

- Intermittent errors: With such a vast scale of servers and functionality, the system is prone to intermittent errors.

- Multiple layers to troubleshoot: Once an error occurs, we have multiple layers to troubleshoot because of the large number of servers and their complex configurations.

- A complete nightmare to troubleshoot: With such large-scale operations, troubleshooting the entire system becomes a complete nightmare.

So, with all these problems and shortcomings, it becomes difficult to maintain and run this type of system, especially when the application is time-critical.
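The "server updates were missed" problem above can be illustrated with a small sketch. This is only a toy model under stated assumptions: the `Server` class, the `find_unpatched` function, and the server and patch names are all hypothetical and do not correspond to any real tool.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical record of one manually maintained server."""
    name: str
    installed_patches: set = field(default_factory=set)


def find_unpatched(servers, required_patches):
    """Return {server name: set of required patches it is still missing}."""
    missing = {}
    for server in servers:
        gap = required_patches - server.installed_patches
        if gap:
            missing[server.name] = gap
    return missing


# Illustrative fleet: every server ends up in a slightly different state.
fleet = [
    Server("web-01", {"patch-a", "patch-b"}),
    Server("web-02", {"patch-a"}),
    Server("db-01"),
]
required = {"patch-a", "patch-b"}

drift = find_unpatched(fleet, required)
for name, gap in sorted(drift.items()):
    print(name, "is missing", sorted(gap))
```

With only three servers the gaps are easy to spot; with hundreds of servers, each configured by hand, this kind of drift is what makes patching and troubleshooting so hard.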

One way to overcome some of these problems is virtualization. Let us now understand what virtualization is.

What is Virtualization?

Virtualization is a technique for running one or more guest operating systems on top of a host operating system.

This way, software professionals can run multiple operating systems in different virtual machines. These virtual machines live on the same host machine and share its resources, such as memory (primary storage) and disk (secondary storage). Thus virtualization reduces the need for extra hardware when running multiple guest operating systems. We can have more than one VM on a single host machine. Each VM may have a different configuration, and as the name suggests, we get a new "machine", but "virtually".

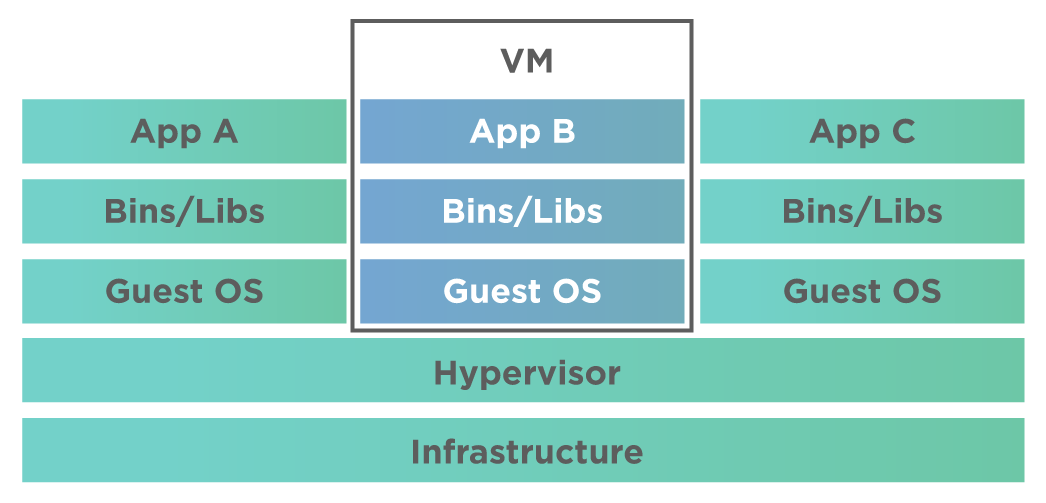

The diagram above shows virtual machines (VMs) implementing virtualization.

As we can see in the above diagram,

- We have a host operating system. This host operating system has three guest operating systems that are running on VMs.

- The image also shows that each virtual machine (VM) runs its own guest OS, which has virtual access to the host's resources.

- Virtualization allows us to run multiple operating systems on the same host machine. Also, less infrastructure is needed because resources are shared.

- A hypervisor is computer software, firmware, or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine.

But this system is not without shortcomings. For example, running several VMs on the same host can degrade performance, because each VM reserves a chunk of system resources for its own guest OS. Also, VMs take time to boot up (often close to a minute), which is quite long, especially for time-critical applications.

Advantages and Disadvantages of Virtualization/VMs

The following table depicts some of the advantages and disadvantages of Virtualization/VMs.

| Advantages of VMs/Virtualization | Disadvantages of VMs/Virtualization |
|---|---|
| The same host machine can run multiple operating systems using VMs. | Performance degrades as more VMs run on a single host machine. |
| In case of a failure, recovery and maintenance are easy. | The hypervisor layer used to achieve virtualization adds overhead. |
| Since infrastructure is shared, less of it is needed and the cost reduces. | The boot-up process takes comparatively longer. |

We can overcome the disadvantages discussed above, mainly the performance overhead, by using a technique called "Containerization".

What is Containerization?

Containerization is a form of operating system virtualization in which we use isolated user spaces called "containers" to run applications. These containers share the same operating system (OS) kernel.

As discussed in previous topics, traditional systems that require us to install applications, their dependencies, binaries, etc., are not very efficient. Virtualization removes some of the shortcomings of these systems, but problems remain, mainly with performance.

So why not just have a system wherein we could bundle everything like application code, support binaries, dependencies required by the application, and configuration code into a single entity and install it only once?

To understand containerization, let us draw an analogy. Let's say person A has a large collection of Hindi songs and movies. One of his friends wants this collection, so A writes it to a CD and gives it to his friend. Suppose another friend of A needs the same collection. Since A already has this set ready, he simply copies it to a new CD and gives it to anyone who wants it. Each such copy of the data is called an image.

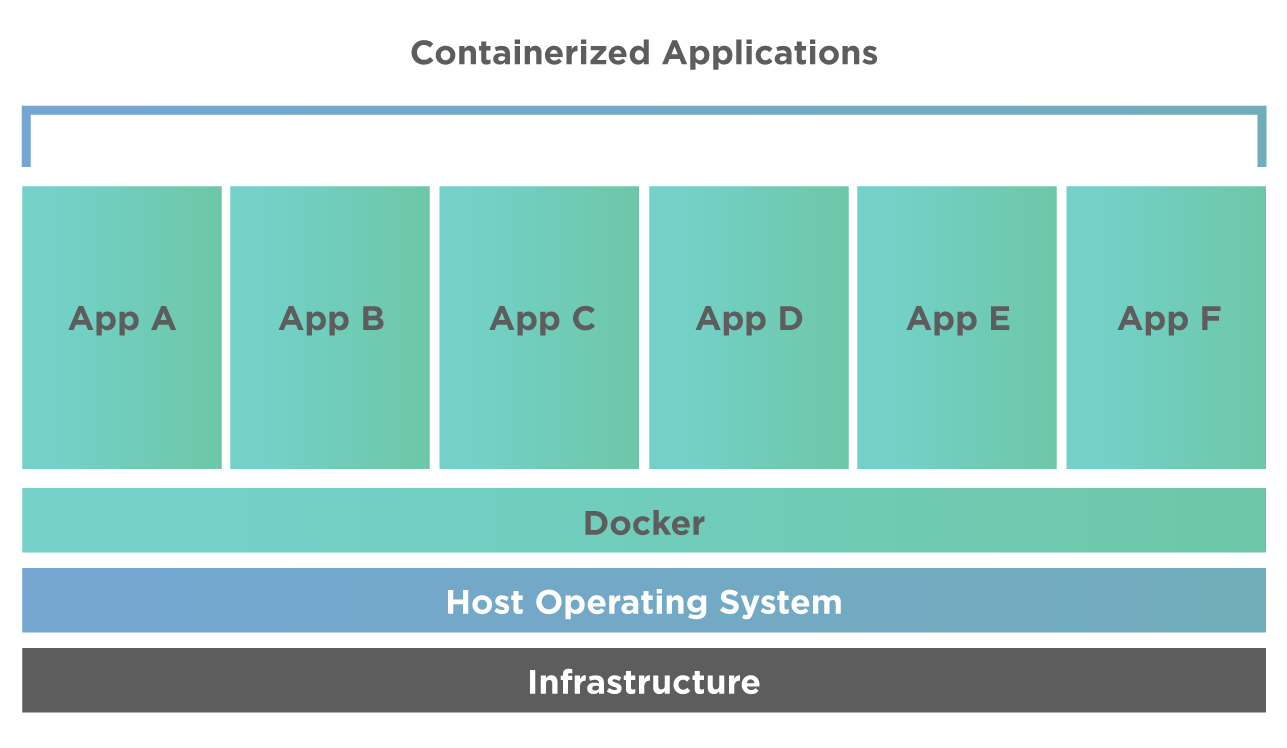

The diagram above shows such a system. Of course, each machine can have more than one such bundle or image.

In the diagram, the units App A, App B, and so on are all Docker images, that is, packaged copies of applications. As we will see later in this series, images are static units. To execute or run an image, we need to associate it with another unit called a container.

This way, we could have many servers and several images on each of these servers. Then, when one server breaks down, we just pick the image from another server, restore it, and get it working.

This is precisely what containerization enables. Containerization (the process of using containers) helps us manage our application and bundle it along with its dependencies, code, and binaries into an image. Then, like real-world cargo containers, it allows us to pack, ship, modify, and delete these units.

With containers, we have Docker images that we can simply deploy on the host machine. If the application breaks for some reason, we can easily deploy another copy of the same image. So containerization makes recovery and troubleshooting easier.
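The recovery idea described above (pick the image from another server and redeploy it) can be sketched as a toy model. Everything here is an assumption made for illustration: servers are plain dictionaries, images are plain strings, and `find_image` and `redeploy` are hypothetical helpers, not part of Docker or any other tool.

```python
def find_image(image_name, servers):
    """Return the first copy of image_name held by a healthy server, else None."""
    for server in servers:
        if server["healthy"] and image_name in server["images"]:
            return server["images"][image_name]
    return None


def redeploy(image_name, target, servers):
    """Copy image_name from a healthy peer onto target and mark target healthy."""
    image = find_image(image_name, servers)
    if image is None:
        raise LookupError(f"no healthy server holds image {image_name!r}")
    target["images"][image_name] = image
    target["healthy"] = True
    return target


# Illustrative fleet: server-1 has broken down and lost its images.
servers = [
    {"name": "server-1", "healthy": False, "images": {}},
    {"name": "server-2", "healthy": True,
     "images": {"app-a": "app-a:v1", "app-b": "app-b:v1"}},
]

redeploy("app-a", servers[0], servers)
print(servers[0])
```

Because the image already bundles the application with its dependencies, restoring a server is a copy operation rather than a fresh round of installation and configuration.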

Compared with virtualization, containerization is generally more efficient and faster to start. So whenever we need performance, we can employ containerization.

What is a Container?

Containerization is the technique of using containers to deploy various applications.

So what is a container?

A container, in technical terms, is a standard unit of software in which we pack the application code and all its dependencies so that the application runs smoothly and reliably irrespective of the computing environment we use.

For example, in the real world, we have shipping containers that transport goods worldwide. Each of these shipping containers has standard dimensions, and all have numbering on them. We can load or unload goods, stack these containers, transport them over regions or even transfer them from one mode of transport to another, like from container ships to railway flatcars, without opening them or knowing what is inside them. The system handling these containers is entirely mechanized, and cranes do all the work.

The use of containers and their mechanized handling reduces transport and storage costs as well as congestion at ports.

Like shipping containers, Docker has individual software units that pack the code and all its dependencies so that the unit can move from one computing environment or machine to another. In Docker terms as well, these units are called containers. Therefore, we can think of Docker containers as shipping containers that support the same operations: load/unload, stack, transport, transfer, etc.

A container runs natively on Linux and shares the host machine's kernel with other containers on that system. Containers are discrete and lightweight; they take little memory beyond what the executable itself needs.

A fundamental definition of the container is that it is a running process, with some features encapsulated into it to keep it isolated from other containers and to some extent from the host as well.
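On Linux, one kernel feature behind this kind of process isolation is namespaces (this detail is standard Linux behavior rather than something stated in this article). The following is a minimal sketch, assuming a Linux host with `/proc` mounted, that lists the namespaces the current process belongs to. The function name `list_namespaces` is illustrative, and on other systems it simply returns an empty result.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace name: kernel identifier} for a process, or {} if unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        for name in sorted(os.listdir(ns_dir)):
            # Each entry is a symbolic link whose target identifies the namespace.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
    except OSError:
        # Not Linux, /proc not mounted, no such process, or access denied.
        return {}
    return namespaces


current = list_namespaces()
for name, identifier in current.items():
    print(f"{name:20} {identifier}")
```

Processes that share a namespace report the same identifier for it, while a process running inside a container typically reports different identifiers from a process running directly on the host.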

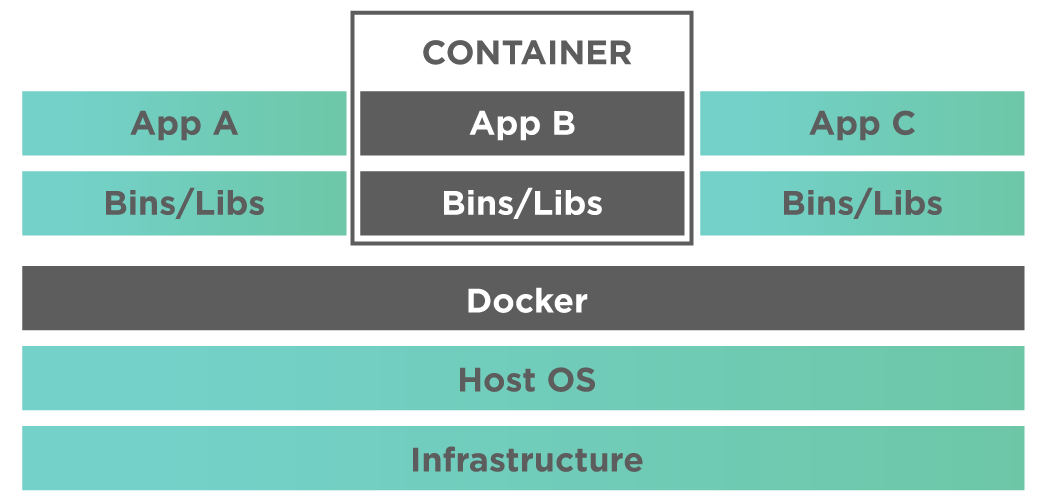

A typical container is shown above.

In the picture above, we can see that every container is an independently deployable unit because it carries its own binaries and libraries.

Containers are also the organizational units of Docker, a popular containerization platform. When we build an image in Docker and run it, it runs in a container. We can create it, move it, ship it, or destroy it, much as a shipping company handles its cargo containers.

If a Docker image is a template, then a container is a running instance of that template. Thus, we can have multiple containers created from the same image.

In a system, each container works within its own private space and file system. This file system is provided to the container by the image. So the image behind a container includes everything we need to run an application: the code or binaries, runtimes, dependencies, and any other file system objects required.
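The image-versus-container relationship described above can be sketched as a toy model. This is not how Docker is implemented: the `Image` and `Container` classes, the `private_files` attribute, and the file paths are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """A static, read-only template: a name plus bundled files."""
    name: str
    files: tuple  # pairs of (path, content); a tuple keeps the image immutable


@dataclass
class Container:
    """An instance of an image with its own private file space."""
    image: Image
    private_files: dict = field(default_factory=dict)

    def read(self, path):
        # Private changes win; otherwise fall back to the image contents.
        if path in self.private_files:
            return self.private_files[path]
        return dict(self.image.files).get(path)

    def write(self, path, content):
        # Writes stay inside this container and never touch the shared image.
        self.private_files[path] = content


shop = Image("shop-app", (("/app/config", "theme=light"),))
first = Container(shop)
second = Container(shop)
first.write("/app/config", "theme=dark")

print(first.read("/app/config"))   # the first container sees its own change
print(second.read("/app/config"))  # the second still sees the image's version
```

Both containers start from the same image, yet a change made in one stays invisible to the other, which is the sense in which each container has its own private space.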

Containers are lightweight. Each runs a discrete process and does not take more memory than any other normal executable, because a container runs natively on Linux and shares the host machine's kernel.

For example, using LXCs (Linux Containers), we can transform the way we scale and run the applications. The LXC project makes base OS container templates available to developers and provides a comprehensive set of tools for container lifecycle management.

In this article, we look at two container technologies:

- Docker containers

- Linux Containers or LXCs.

We will learn about Docker containers in detail in upcoming articles. For now, let us briefly discuss LXCs.

What is LXC - Linux Container Project?

Containers are not a new concept. Both the Linux Containers project and the Docker platform use them. Let us compare Linux Containers (LXCs) and Docker containers in this section.

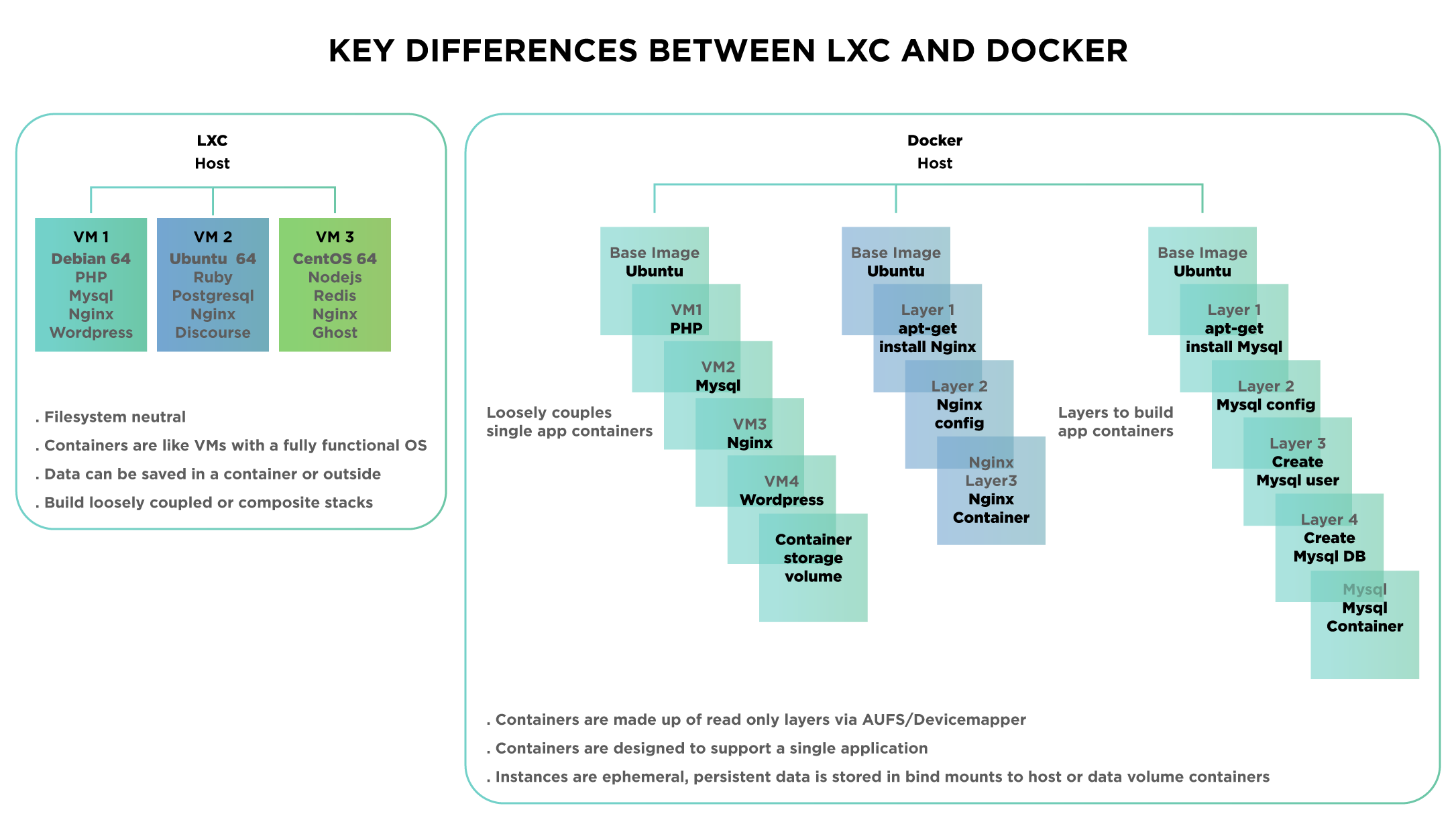

The diagram above shows the comparison between LXCs and Docker containers.

LXC is a container technology that provides lightweight Linux containers. Docker, on the other hand, is a container-based technology that we can view as a single-application virtualization engine.

Unlike LXCs, we cannot treat Docker containers as lightweight VMs, because they are mostly restricted to a single application.

We can log in to an LXC container, treat it like an OS, and even install applications and services. It works like a machine, as expected. Docker containers are not designed to be used this way. Although we can open a shell inside a running Docker container (for example, with `docker exec`), the container is built around a single application process and is typically treated as disposable rather than as a long-lived machine. So while we can view an LXC container as a machine in itself, this is not the case with Docker.

By default, a Docker container does not run a full init system, so services, daemons, syslog, cron jobs, etc., are not available out of the box. LXC containers have all of these.

When should we choose LXCs or Docker Containerization?

There is no right or wrong choice when it comes to containers. It all depends on the users' needs. The Docker approach is opinionated: there is a Docker way of accomplishing tasks at every stage, from installing and running to scaling containers.

At the same time, LXC gives us a wide range of capabilities and freedom to architect and run our containers.

When should we use Containerization?

Some of the reasons to use containers are:

- Containers offer a logical packaging mechanism for applications, which lets us abstract applications from the environment they run in.

- Container-based applications can be deployed anywhere, regardless of whether the target environment is a developer's laptop, a public cloud, or a private data center, because the applications are decoupled from their environments.

- Containers allow developers to create environments that are isolated from other applications, predictable, and able to run anywhere.

- Container-based applications are portable and more efficient, which results in better utilization of machine resources.

Containerization vs. Virtualization

Though both containers and virtual machines let us run multiple isolated workloads on a single host machine, they do so differently. VMs create many guest OSs on the host machine, as we can see from the diagram in the previous sections. Containerization, on the other hand, does not create multiple OSs. Instead, it creates a container for each application, and all containers share the host OS.

The following table shows these differences between Container and VM.

| Container | Virtual Machine (VM) |
|---|---|
| Creates multiple containers on the host machine, all sharing the host OS kernel. | Creates multiple guest operating systems (OS) on the host machine. |
| Containers are lightweight. | VMs are heavyweight. |
| Containers need little memory beyond what the application itself uses. | There is significant memory overhead, as each VM runs a full guest OS on top of the host's resources. |
| Containers are more portable, start faster, and scale more efficiently. | VMs are slower to start and less efficient. |
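The memory row of the table can be made concrete with some back-of-the-envelope arithmetic. The figures below are made-up assumptions chosen only for illustration (they are not measurements), and the function names are hypothetical.

```python
def vm_total_mb(app_count, app_mb, guest_os_mb):
    """Each VM carries its application plus a full guest OS."""
    return app_count * (app_mb + guest_os_mb)


def container_total_mb(app_count, app_mb, runtime_overhead_mb):
    """Each container carries its application plus a small runtime overhead."""
    return app_count * (app_mb + runtime_overhead_mb)


# Assumed, illustrative figures only.
APPS = 10
APP_MB = 200
GUEST_OS_MB = 512
CONTAINER_OVERHEAD_MB = 5

vm_mb = vm_total_mb(APPS, APP_MB, GUEST_OS_MB)
container_mb = container_total_mb(APPS, APP_MB, CONTAINER_OVERHEAD_MB)

print(f"VMs:        {vm_mb} MB")         # 10 * (200 + 512) = 7120
print(f"Containers: {container_mb} MB")  # 10 * (200 + 5)   = 2050
```

Under these assumptions the guest OS copies account for most of the VM total, which is why sharing one kernel across containers tends to use the host's memory more sparingly.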

Key Takeaways

- Before the advent of containers, deploying the application and then maintaining it was a lengthy, cumbersome process and often resulted in errors.

- It was difficult to track errors, especially in large systems where many machines were involved.

- Virtualization partly overcame these problems by running multiple operating systems on a single host machine. But performance is a big problem in this case.

- Containers address many of these problems. Containers bundle the application binaries, their dependencies, and other required files into a single image.

- Whenever we want an application on the host machine, we deploy this image in a container.

- When the application breaks or any other problem arises, we can replace it directly with another copy of the image.

- Also, since containers are independent and lightweight, they put little stress on the host machine as far as resource sharing is concerned, and performance is better.

- Linux Containers (LXCs) and Docker are the main container technologies covered here.